Explainable Artificial Intelligence (XAI) in Chemistry and Psychology: Connecting Neurotransmitters to Drug Discovery and Mental-Health Screening

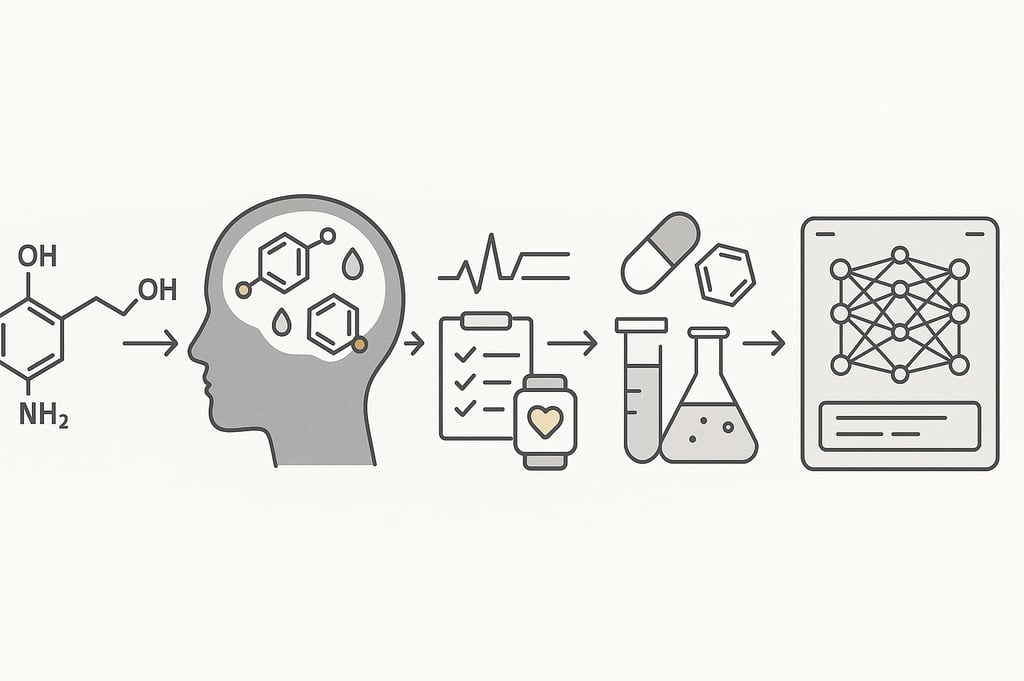

Artificial Intelligence (AI) continues to transform chemistry and psychology by optimizing discovery, diagnosis, and decision-making. However, the "black-box" nature of most models, which generate outputs without interpretable reasoning, poses challenges to professional trust, reproducibility, and ethics. Explainable AI (XAI) seeks to remedy this by making machine reasoning transparent and interpretable to human users. This article explores how neurotransmitters link chemistry to psychology, why mental-health screening and drug discovery need transparency, and how XAI creates a bridge between molecular science and clinical decision-making.

DRUG DISCOVERY · NATURAL PRODUCTS CHEMISTRY · MACHINE LEARNING

Sha-Sonja Arcia

11/15/2025 · 4 min read

Explainable Artificial Intelligence (XAI) has become central to scientific and healthcare innovation because it opens the reasoning of complex AI systems to inspection and accountability. Within chemistry and psychology, XAI bridges the gap between algorithmic precision and human understanding, particularly in drug discovery and mental-health diagnostics, where it supports predictive modeling, ethical oversight, and clinical trust. Research in AI-driven mental healthcare [1][4], generative explainability frameworks [2], predictive precision modeling [3], neurochemical studies linking catecholamines to behavioral outcomes [5], and explainable AI approaches in drug design [6] suggests that explainability is fundamental for aligning computational intelligence with scientific integrity, patient safety, and ethical practice.

Mental Health Begins with Chemistry

Although mental health is often described in emotional or behavioral terms, the foundation is deeply chemical. Neurotransmitters such as dopamine, norepinephrine, and epinephrine (catecholamines) influence attention, motivation, stress response, and emotional regulation [5]. When their levels or signaling pathways are disrupted, symptoms of anxiety, depression, ADHD, or mood instability may emerge.

This suggests that mental states are not purely abstract concepts; they are grounded in biochemical processes occurring at the molecular level.

Bhattacharya [5] explains that understanding and measuring catecholamine dynamics is critical for linking chemical signaling to behavioral expression. This scientific perspective shows that chemistry and psychology are inseparable.

How Mental-Health Screening Fits into the Picture

Mental-health screening tools—digital platforms, questionnaires, wearable sensors, and AI-based systems—identify behavioral and physiological signals associated with neurochemical imbalance [1].

Fanarioti and Karpouzis [1] highlight the need for transparency and ethical governance in AI-enabled mental-health systems, emphasizing issues such as:

privacy and consent,

algorithmic bias,

equitable access,

clinician and patient trust,

the risk of dehumanization in care.
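One common way to make the algorithmic-bias concern concrete is to compare a screening model's error rates across groups. The sketch below is only an illustration of that idea and is not drawn from [1]: the group labels, the records, and the function name `rates_by_group` are hypothetical, and a real audit would need far larger samples and clinically chosen metrics.

```python
from collections import defaultdict

# Hypothetical audit records: (group, true_label, predicted_label),
# where 1 means "flagged for follow-up". Invented for illustration only.
RECORDS = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0), ("A", 0, 0), ("A", 0, 1),
    ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 0, 0), ("B", 0, 0), ("B", 0, 0),
]


def rates_by_group(records):
    """Return false-negative and false-positive rates for each group."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        # "t"/"f" = prediction correct or not; "p"/"n" = predicted class.
        key = ("t" if y_true == y_pred else "f") + ("p" if y_pred == 1 else "n")
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]  # people who truly needed follow-up
        negatives = c["fp"] + c["tn"]  # people who did not
        rates[group] = {
            "false_negative_rate": c["fn"] / positives if positives else None,
            "false_positive_rate": c["fp"] / negatives if negatives else None,
        }
    return rates


RATES = rates_by_group(RECORDS)
for group in sorted(RATES):
    print(group, RATES[group])
```

In this toy data the model misses true cases in group B more often than in group A. A gap of that kind is what a transparent audit would surface for human review.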

Kandala, Moharir, and Nayak [2] identify a “lab-to-clinic gap” where traditional explainability models such as SHAP and LIME produce statistical descriptions but not clinically meaningful interpretations. They propose a Generative Operational Framework using LLMs with Retrieval-Augmented Generation (RAG) to transform raw output into explanations that clinicians can interpret and act upon [2].
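To illustrate the gap between a statistical attribution and a clinically readable explanation, here is a minimal sketch under stated assumptions. It computes exact Shapley values (the quantity that SHAP approximates) for a toy scoring function by enumerating feature orderings, then renders them as sentences with a fixed template. The feature names, weights, and values are hypothetical. The template is a simple stand-in and does not reproduce the LLM and RAG framework proposed in [2].

```python
from itertools import permutations

# Hypothetical reference profile and individual; values invented for illustration.
BASELINE = {"sleep_hours": 7.0, "questionnaire_score": 4.0, "resting_hr": 65.0}
PATIENT = {"sleep_hours": 4.0, "questionnaire_score": 14.0, "resting_hr": 80.0}


def risk_score(x):
    """Toy, non-clinical scoring function standing in for a trained model."""
    score = 0.05 * x["questionnaire_score"] + 0.01 * (x["resting_hr"] - 60.0)
    if x["sleep_hours"] < 5.0:
        # Interaction: short sleep amplifies the questionnaire signal.
        score += 0.02 * x["questionnaire_score"]
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values by averaging marginal contributions over all orderings.

    Features not yet "switched on" keep their baseline value. Only feasible
    for a handful of features, since the number of orderings grows factorially.
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orders = list(permutations(names))
    for order in orders:
        current = dict(baseline)
        previous = model(current)
        for name in order:
            current[name] = instance[name]
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: total / len(orders) for name, total in totals.items()}


def describe(phi, instance, baseline):
    """Turn raw attributions into plain sentences, largest effect first."""
    lines = []
    for name, value in sorted(phi.items(), key=lambda kv: -abs(kv[1])):
        direction = "raised" if value > 0 else "lowered"
        lines.append(
            f"{name} = {instance[name]} (reference {baseline[name]}) "
            f"{direction} the score by {abs(value):.2f}"
        )
    return lines


PHI = shapley_values(risk_score, PATIENT, BASELINE)
for line in describe(PHI, PATIENT, BASELINE):
    print(line)
```

The attributions sum to the difference between the individual's score and the reference score, which makes the numbers auditable. Whether they are clinically meaningful is a separate question, and that is the gap [2] describes.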

Transparency helps clinicians detect biases, understand treatment recommendations, and ensure ethical application of AI within sensitive psychological contexts.

Drug Discovery Targets the Same Chemical Pathways

What screening detects, drug discovery attempts to correct. Pharmacological treatment focuses on modifying neurotransmitter activity, whether through inhibition, stimulation, or receptor-level modulation.

AI-assisted drug discovery accelerates the search for compounds by predicting:

molecular interactions,

biological activity,

toxicity levels,

therapeutic suitability.

Predictive chemistry applies AI and machine learning to anticipate molecular behaviors and drug responses before laboratory testing. However, high-performance neural models are difficult to interpret, which makes it hard to see why a given prediction was made.

Qadri et al. [6] demonstrate how XAI methods illuminate connections between molecular descriptors, ADME properties, and drug-target interactions. Explainability enables chemists to understand which chemical features influence predictions, improving trust, reproducibility, and regulatory validation.
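One model-agnostic way to ask which descriptors influence a prediction is permutation importance: shuffle one descriptor across molecules and measure how much the model's error grows. The sketch below is a hedged illustration, not a method taken from [6]. The descriptor table, the toy model, and its coefficients are all invented, and the targets are generated by the toy model itself so that the unshuffled error is zero.

```python
import random

# Hypothetical descriptor table; every value is invented for illustration.
DESCRIPTORS = ["logp", "mol_weight", "h_bond_donors"]
MOLECULES = [
    {"logp": 1.2, "mol_weight": 180.0, "h_bond_donors": 2},
    {"logp": 3.4, "mol_weight": 320.0, "h_bond_donors": 1},
    {"logp": 0.5, "mol_weight": 150.0, "h_bond_donors": 4},
    {"logp": 4.1, "mol_weight": 410.0, "h_bond_donors": 0},
    {"logp": 2.3, "mol_weight": 260.0, "h_bond_donors": 3},
    {"logp": 2.9, "mol_weight": 290.0, "h_bond_donors": 5},
]


def toy_activity_model(mol):
    """Stand-in for a trained black-box model; deliberately ignores h_bond_donors."""
    return 0.8 * mol["logp"] - 0.004 * mol["mol_weight"]


# Assumption: targets come from the toy model, so the baseline error is zero.
TARGETS = [toy_activity_model(m) for m in MOLECULES]


def mean_squared_error(model, molecules, targets):
    return sum((model(m) - t) ** 2 for m, t in zip(molecules, targets)) / len(targets)


def permutation_importance(model, molecules, targets, feature, repeats=20, seed=0):
    """Average increase in error after shuffling one descriptor column."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    baseline = mean_squared_error(model, molecules, targets)
    increases = []
    for _ in range(repeats):
        column = [m[feature] for m in molecules]
        rng.shuffle(column)
        # Copy each molecule with the shuffled value swapped in.
        shuffled = [dict(m, **{feature: v}) for m, v in zip(molecules, column)]
        increases.append(mean_squared_error(model, shuffled, targets) - baseline)
    return sum(increases) / repeats


IMPORTANCE = {
    name: permutation_importance(toy_activity_model, MOLECULES, TARGETS, name)
    for name in DESCRIPTORS
}
for name, value in sorted(IMPORTANCE.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:.4f}")
```

A descriptor the model never uses scores exactly zero here, while descriptors it depends on score above zero. That is the kind of signal a chemist could check against domain knowledge before trusting the model.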

A Real-World Example: Dopamine and L-DOPA

Dopamine is a catecholamine neurotransmitter essential for movement, motivation, reward processing, and cognitive flexibility. Reduced dopamine signaling is associated with Parkinson’s disease, ADHD, and major depressive disorder [5].

L-DOPA, a chemical precursor to dopamine, is widely used to treat Parkinson’s symptoms. While effective for restoring motor function, it can cause behavioral side effects such as:

hallucinations,

compulsivity,

mood swings.

This demonstrates how chemical interventions designed to improve function can also alter behavior in complex and unpredictable ways.

Explainable AI can help researchers:

predict individual drug responses,

identify side-effect mechanisms,

optimize compounds toward safer outcomes [6].

How XAI Connects Screening and Drug Discovery

Here is the bridge:

Chemistry explains molecular structure.

Neurochemistry explains how neurotransmitters regulate behavior.

Mental-health screening detects behavioral signatures of chemical imbalance.

Drug discovery designs compounds that intervene in chemical pathways.

XAI provides transparency across each layer.

Explainable models in chemistry help ensure that AI-generated compounds can be understood, reproduced, and validated safely.

XAI unites chemistry and psychology by making chemical effects on behavior explainable, measurable, and clinically meaningful. This supports the emerging goal of precision psychiatry, where treatment is tailored to real biological signatures.

Future Directions

Future development in XAI should emphasize:

integration of RAG-enabled interpretability into pharmacological and clinical databases;

participatory co-design with chemists, clinicians, researchers, and patients;

longitudinal evaluation of how explanations influence clinical and scientific outcomes;

regulatory standards for transparency, reproducibility, and scientific accountability.

Ultimately, sustainable progress in AI depends on harmonizing computational complexity with human comprehension.

Conclusion

Explainable Artificial Intelligence stands as the foundation of ethical, interpretable innovation across chemistry and psychology. From molecular drug modeling to mental-health diagnostics, explainability enhances transparency, reproducibility, and public trust. Realizing the full potential of XAI requires interdisciplinary cooperation that unites data science, ethics, and human empathy—ensuring that AI serves both discovery and humanity.

References

[1] A. K. Fanarioti and K. Karpouzis, Artificial Intelligence and the Future of Mental Health in a Digitally Transformed World, Computers, 2025.

[2] R. Kandala, A. K. Moharir, and D. A. Nayak, From Explainability to Action: A Generative Operational Framework for Integrating XAI in Clinical Mental Health Screening, arXiv, 2025.

[3] S. Omiyefa, Artificial Intelligence and Machine Learning in Precision Mental Health Diagnostics and Predictive Treatment Models, Int. J. Res. Publ. Rev., 2025.

[4] H. R. Saeidnia et al., Ethical Considerations in Artificial Intelligence Interventions for Mental Health, Social Sciences, 2024.

[5] A. Bhattacharya, Bridging the Gap: Understanding the Significance of Catecholamines in Neurochemistry and Recent Advances in Their Detection, Science Reviews – Biology, 2023.

[6] Y. A. Qadri et al., Explainable Artificial Intelligence: A Perspective on Drug Discovery, Pharmaceutics, 2025.

Disclaimer: This article is intended for educational and informational purposes only. It summarizes current research and emerging perspectives on explainable artificial intelligence (XAI), neurochemistry, mental-health screening, and pharmaceutical discovery. XAI remains a developing field, and many of the concepts discussed are active areas of research rather than established or definitive conclusions. Scientific understanding may evolve as new evidence becomes available. Nothing in this article should be interpreted as medical advice, clinical guidance, or pharmaceutical instruction. Readers should consult qualified healthcare or scientific professionals before applying any ideas or examples referenced. The author and ReaxionLab assume no liability for health and/or legal outcomes resulting from the use of this information.
