Predictive Thermodynamics: Using Machine Learning to Model Energy Landscapes and Physical Laws

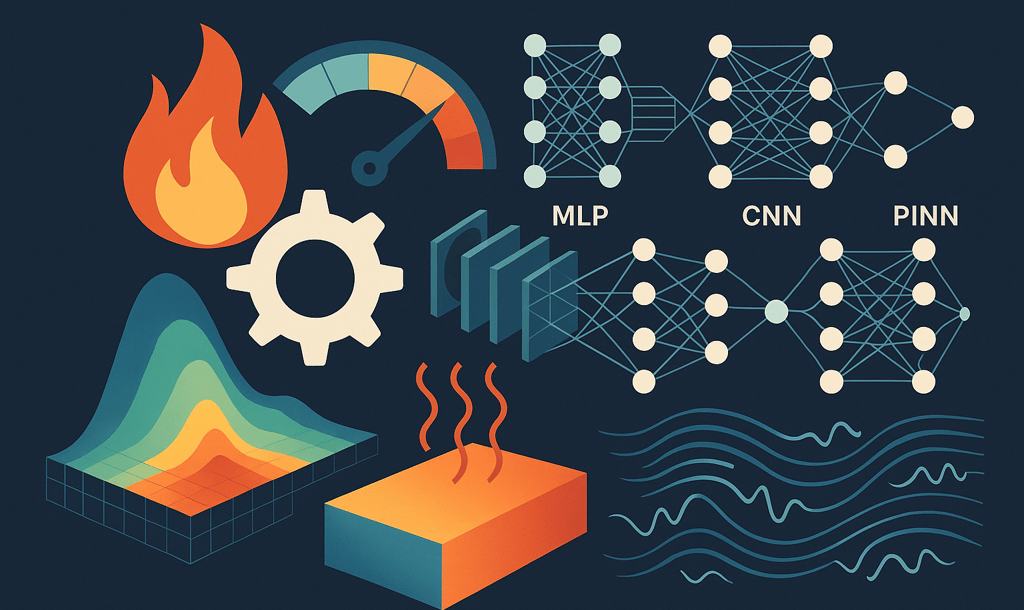

Thermodynamics, which rests on the concepts of entropy and energy conservation, is fundamental to both science and engineering. Recent developments in machine learning (ML), especially when combined with constraints derived from physics, provide powerful tools for modeling and optimizing thermodynamic systems. This article reviews methods such as multi-layer perceptrons, convolutional neural networks, and physics-informed neural networks (PINNs) for predicting energy landscapes, heat conduction, and fluid dynamics. By incorporating governing equations into their training objectives, these hybrid methods preserve physical consistency, reduce computational cost, and improve accuracy. Applications in engineering, chemistry, and materials science have shown improvements in predictive robustness, interpretability, and convergence speed.

THERMODYNAMICS · MACHINE LEARNING · MATERIALS DISCOVERY

Anushya Krishnan

11/2/2025 · 6 min read

Thermodynamics and Machine Learning: Concepts and Approaches

Thermodynamics, governed by the laws of energy conservation and entropy, underpins a wide range of scientific and engineering disciplines. The first law states that energy cannot be created or destroyed, only transformed from one form to another. The second law states that the entropy of an isolated system never decreases, so the energy available to do useful work tends to diminish over time even though the total energy remains constant [1]. These principles describe how energy is transformed and degraded in physical systems, but applying them to predict and optimize real-world processes remains difficult. In recent years, machine learning (ML) has made significant progress in modeling and optimizing thermodynamic systems, though challenges such as accurately capturing temperature delay effects still limit overall precision and control [2]. By combining data-driven approaches with thermodynamic principles, researchers can improve both the accuracy and physical consistency of complex system models. For instance, multi-layer perceptrons have been shown to reliably approximate thermodynamic properties like entropy and its derivatives, enabling efficient simulations of non-ideal compressible flows without the need for memory-intensive lookup tables [3]. Likewise, hybrid methods that integrate deep convolutional neural networks (CNNs) with physics-informed loss functions based on the Laplace equation have achieved over 60% faster convergence in steady-state heat conduction problems while adhering more closely to physical laws [4].
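For readers who prefer symbols, the first and second laws described above can be written compactly. This is a standard textbook form rather than anything specific to the cited works, and the sign convention is an assumption: Q is heat added to the system, W is work done by the system, U is internal energy, and S is entropy.

```latex
\begin{aligned}
\Delta U &= Q - W && \text{(first law, closed system)} \\
\Delta S &\ge 0   && \text{(second law, isolated system)}
\end{aligned}
```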

Why this matters: These advances aren't just academic. They're transforming how engineers design everything from more efficient HVAC systems to safer chemical reactors. By predicting thermal behavior more accurately and quickly, ML-enhanced thermodynamic models reduce the need for costly physical prototypes and enable optimization that was previously impractical.

A key area where such data-driven and physics-informed methods find application is in the study of energy landscapes, multidimensional surfaces that describe how a system's energy varies with its configurational variables [5]. In molecular and materials science, such landscapes map all possible configurations of a system to their corresponding potential energies, forming basins of attraction associated with local minima and transitions between basins marked by saddle points. Tools like disconnectivity graphs facilitate visualization of these landscapes, providing insights into thermodynamic behavior and phase transitions [6]. Modeling energy landscapes is crucial in chemistry, materials discovery, and physics because it enables the prediction of reaction pathways, transition states, and molecular stability. In modeling heat conduction, hybrid approaches that combine machine learning with physics-based constraints have demonstrated improved temperature field predictions across variable geometries and boundary conditions, outperforming standalone ML models in both accuracy and physical fidelity [4].
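To make the ideas of basins, minima, and barriers concrete, here is a minimal sketch on a one-dimensional toy landscape. The double-well potential, the step size, and the starting points are all illustrative assumptions, not taken from the cited works. In one dimension the "saddle point" between two basins reduces to a local maximum.

```python
def energy(x):
    """Toy double-well potential (assumed): minima at x = -1 and x = +1."""
    return (x**2 - 1.0) ** 2


def gradient(x):
    """Analytic derivative dE/dx of the toy potential."""
    return 4.0 * x * (x**2 - 1.0)


def descend(x, step=0.01, iters=5000):
    """Follow the negative gradient until x settles in a local minimum."""
    for _ in range(iters):
        x -= step * gradient(x)
    return x


# Each starting configuration relaxes into the basin it belongs to.
starts = [-1.8, -0.3, 0.3, 1.8]
basins = {x0: descend(x0) for x0 in starts}

# Barrier between the two basins: energy at the maximum (x = 0)
# minus energy at a minimum (x = 1).
barrier_height = energy(0.0) - energy(1.0)

for x0, x_min in basins.items():
    print(f"start {x0:+.1f} -> minimum near {x_min:+.3f}")
print("barrier height:", barrier_height)
```

Starting points on the left of the barrier relax to the left minimum and those on the right relax to the right one, which is the sense in which each minimum has a basin of attraction. Real molecular landscapes have many dimensions and need dedicated saddle-search methods.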

An emerging technique, Physics-Informed Neural Networks (PINNs), integrates governing physical laws such as partial differential equations directly into the training process. Instead of learning purely from data, PINNs minimize a composite loss function that enforces both data accuracy and consistency with physics-based constraints. For example, in fluid or thermal systems, PINNs can model fields such as temperature, pressure, or velocity while satisfying laws like the Navier–Stokes or heat equations. Being inherently differentiable, PINNs enable gradient-based optimization of design parameters such as geometry or material properties without requiring adjoint solvers. Adaptive sampling of collocation points further enhances accuracy as the network converges, and PINNs have proven effective as surrogate models for high-cost simulations in areas such as airfoil shape optimization. This framework is also applicable to thermodynamic systems, allowing efficient prediction and optimization of energy landscapes while respecting conservation laws [7]. Furthermore, recent research in stochastic thermodynamics applied to neural networks indicates that the amount of information a model can generalize from data is fundamentally constrained by energy dissipation, providing a quantitative framework for assessing the thermodynamic efficiency of learning [8].
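The composite loss described above can be sketched in a few lines. This is a deliberately simplified illustration, not a real PINN: a cubic polynomial stands in for the neural network, the governing equation is assumed to be 1D steady heat conduction (u'' = 0) with boundary data u(0) = 0 and u(1) = 1, and finite-difference gradients stand in for backpropagation. All names and values are hypothetical.

```python
def model(params, x):
    """Stand-in for a neural network: a cubic polynomial in x."""
    a0, a1, a2, a3 = params
    return a0 + a1 * x + a2 * x**2 + a3 * x**3


def second_derivative(params, x):
    """u''(x) of the cubic; this is the residual of the equation u'' = 0."""
    _, _, a2, a3 = params
    return 2.0 * a2 + 6.0 * a3 * x


def composite_loss(params, data, collocation, weight=1.0):
    """Data misfit plus weighted physics residual at collocation points."""
    data_term = sum((model(params, x) - u) ** 2 for x, u in data) / len(data)
    physics_term = sum(
        second_derivative(params, x) ** 2 for x in collocation
    ) / len(collocation)
    return data_term + weight * physics_term


def numerical_gradient(f, params, eps=1e-6):
    """Central-difference gradient (stand-in for automatic differentiation)."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2.0 * eps))
    return grads


data = [(0.0, 0.0), (1.0, 1.0)]            # boundary temperatures (assumed)
collocation = [i / 10 for i in range(11)]  # points where physics is enforced


def loss(p):
    return composite_loss(p, data, collocation)


params = [0.0, 0.0, 0.5, -0.5]  # deliberately wrong initial guess
initial_loss = loss(params)
for _ in range(5000):
    grads = numerical_gradient(loss, params)
    params = [p - 0.02 * g for p, g in zip(params, grads)]
final_loss = loss(params)

print("loss:", initial_loss, "->", final_loss)
print("u(0.5) =", model(params, 0.5))
```

Only two data points are supplied, yet the physics term pins down the interior: the fitted curve approaches the straight line u(x) = x, which is the exact solution of this assumed problem. That is the sense in which the physics residual compensates for scarce data.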

Real-world applications: These methods are already being put to practical use. In drug design, hybrid ML-thermodynamic models are used to predict molecular properties, accelerating the identification of promising pharmaceutical candidates. In catalysis optimization, researchers have combined computational thermodynamics with ML to discover efficient catalysts for hydrogen evolution and CO₂ reduction, processes critical for clean energy and climate change mitigation. The same tools are being applied to materials discovery, helping identify novel compounds with specific thermal or chemical properties in a fraction of the time traditional methods require.

Advances in Physics-Informed Thermodynamic Modeling

Recent studies demonstrate that ML models, particularly when integrated with physical constraints, achieve significant improvements in both accuracy and computational efficiency across thermodynamic systems. These advances enable faster and more precise modeling of complex physical processes that would otherwise require computationally intensive numerical simulations. As noted by Brunton et al. (2020), ML applied to reduced-order modeling, flow control, and shape optimization has already shown great promise in fluid mechanics by bridging data-driven methods with first-principles approaches. In steady-state heat conduction studies, hybrid models combining convolutional neural networks (CNNs) with physics-based constraints, such as the Laplace equation, converged 60.9% faster than traditional data-driven methods. These models not only reduced training time but also produced temperature fields that adhered more closely to physical laws, as indicated by lower residuals and smoother profiles.
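As a rough illustration of what a Laplace-equation constraint measures, the sketch below computes the mean squared residual of the discrete Laplacian on a 2D temperature grid using the standard 5-point finite-difference stencil. It is not the loss used in the cited study; the grid size, the test fields, and the function name are assumptions chosen for clarity.

```python
def laplace_residual_loss(field, h):
    """Mean squared 5-point-stencil Laplacian over interior grid points."""
    n_rows, n_cols = len(field), len(field[0])
    total, count = 0.0, 0
    for i in range(1, n_rows - 1):
        for j in range(1, n_cols - 1):
            lap = (
                field[i + 1][j] + field[i - 1][j]
                + field[i][j + 1] + field[i][j - 1]
                - 4.0 * field[i][j]
            ) / h**2
            total += lap**2
            count += 1
    return total / count


n = 11              # grid points per side (assumed)
h = 1.0 / (n - 1)   # grid spacing on the unit square

# u = x^2 - y^2 satisfies the Laplace equation; u = x^2 + y^2 does not
# (its Laplacian is 4 everywhere).
harmonic = [[(i * h) ** 2 - (j * h) ** 2 for j in range(n)] for i in range(n)]
non_harmonic = [[(i * h) ** 2 + (j * h) ** 2 for j in range(n)] for i in range(n)]

loss_harmonic = laplace_residual_loss(harmonic, h)
loss_non_harmonic = laplace_residual_loss(non_harmonic, h)

print("residual loss, physically consistent field:", loss_harmonic)
print("residual loss, inconsistent field:", loss_non_harmonic)
```

A field that obeys the equation scores essentially zero, while one that violates it is penalized. Adding a term like this to a network's training objective pushes predicted temperature fields toward physically admissible solutions. In a CNN setting the same stencil is commonly applied as a fixed convolution kernel.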

PINNs take this approach further by embedding governing equations, such as the Navier–Stokes or heat equations, directly into their loss functions. For example, in airfoil shape optimization, PINNs predicted flow fields with high precision while simultaneously optimizing design parameters using gradient-based methods, eliminating the need for expensive adjoint solvers and enhancing interpretability. Adaptive sampling during training further refined network predictions as optimal configurations were approached.
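The point about gradient-based design optimization without adjoint solvers rests on the surrogate being differentiable end to end. The sketch below shows the idea with a tiny forward-mode automatic differentiation class. The `Dual` class, the scalar design variable `d`, and the cost function with two competing terms are hypothetical stand-ins for a trained surrogate, not a model from the cited work.

```python
class Dual:
    """A value paired with its derivative (forward-mode autodiff)."""

    def __init__(self, value, deriv=0.0):
        self.value, self.deriv = value, deriv

    @staticmethod
    def _lift(other):
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = self._lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = self._lift(other)
        return Dual(
            self.value * other.value,
            self.deriv * other.value + self.value * other.deriv,
        )

    __rmul__ = __mul__

    def __truediv__(self, other):
        other = self._lift(other)
        return Dual(
            self.value / other.value,
            (self.deriv * other.value - self.value * other.deriv)
            / other.value**2,
        )

    def __rtruediv__(self, other):
        return self._lift(other) / self


def surrogate_cost(d):
    """Hypothetical surrogate: one term falls with d, the other grows."""
    return 1.0 / d + d * d


# Gradient descent on the design variable, using the exact derivative
# that falls out of evaluating the surrogate on a Dual number.
d = 2.0
for _ in range(200):
    out = surrogate_cost(Dual(d, 1.0))
    d -= 0.1 * out.deriv
best_d = d

print("optimized design variable:", best_d)
print("cost at optimum:", surrogate_cost(best_d))
```

For this assumed cost the optimum can be checked by hand: setting the derivative 2d - 1/d² to zero gives d = (1/2)^(1/3). In practice a framework's automatic differentiation plays the role of `Dual`, and the surrogate is the trained network.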

In engineering practice: Consider the design of a new heat exchanger for industrial applications. Traditional computational fluid dynamics (CFD) simulations might take days or weeks to evaluate a single design. With PINN-based surrogate models, engineers can explore thousands of design variations in hours, finding optimal configurations that maximize heat transfer while minimizing pressure drop and material costs. This dramatic acceleration in the design cycle translates directly to faster product development and more competitive solutions.

In comparison, purely data-driven models often struggle with generalization, particularly when extrapolating to new physical regimes or rare boundary conditions. Physics-informed models, however, provide robustness even with limited datasets, ensuring physical consistency and improved performance where labeled data is scarce. Applications of these methods span multiple fields: in materials science, neural network-based energy landscape modeling helps identify stable configurations and transition states, accelerating materials discovery; in chemistry, ML estimates thermodynamic properties such as entropy and enthalpy, enabling more accurate simulations of non-ideal systems; and in engineering, ML-driven surrogates enhance the efficiency and precision of fluid dynamics and heat transfer simulations. Collectively, these results underscore the growing impact of physics-informed ML approaches in transforming the modeling, prediction, and optimization of thermodynamic systems.

Emerging frontiers: The convergence of ML and thermodynamics is opening new research directions. Scientists are now applying energy landscape concepts from physics to understand machine learning systems themselves, treating neural network training as a thermodynamic process with its own "temperature" and phase transitions. This cross-pollination of ideas is leading to more robust ML algorithms and deeper insights into both artificial and natural learning systems. In 2024, researchers developed frameworks that bridge thermodynamic principles with ML to predict complex molecular properties, reporting that physics-guided models outperformed purely data-driven approaches in both accuracy and interpretability.

Conclusion

The integration of machine learning into thermodynamic modeling demonstrates substantial potential for advancing predictive accuracy, reducing computational demands, and enhancing adherence to physical laws. By combining data-driven learning with physics-based constraints, these approaches can capture complex, nonlinear relationships while maintaining physically consistent outcomes. The resulting models not only accelerate simulations of processes such as heat conduction, energy optimization, and fluid flow but also enable the exploration of vast design spaces in engineering and materials science. This synergy between physics and machine learning paves the way for more efficient discovery, optimization, and control in diverse scientific and industrial applications, marking a significant step toward truly intelligent computational tools in thermodynamics.

Looking ahead: As these methods mature and become more accessible through user-friendly software tools, we can expect widespread adoption across industries, from aerospace and automotive design to chemical processing and renewable energy. The ability to rapidly and accurately predict thermodynamic behavior will enable innovations that seemed impractical just a few years ago: ultra-efficient thermal management systems, novel materials with precisely engineered properties, and optimized industrial processes that minimize energy consumption and environmental impact. For engineers, researchers, and decision-makers, staying informed about these ML-enhanced thermodynamic tools isn't just academically interesting. It's becoming essential for maintaining competitive advantage in an increasingly energy-conscious world.

References

[1] Popovic, M. Entropy 2017, 19 (11), 621. DOI: 10.3390/e19110621

[2] Zhang, C.; Gao, W.; Gong, G. Int. J. Heat Technol. 2024, 42 (5), 1511–1520. DOI: 10.18280/ijht.420515

[3] Scoggins, J. B. In Hypersonics: Advances in Global Research and Applications; Springer: Cham, 2023; pp 51–81. DOI: 10.1007/978-3-031-30936-6_3

[4] Gao, H.; Sun, L.; Wang, J. X. arXiv preprint 2020, arXiv:2005.08119. DOI: 10.48550/arXiv.2005.08119

[5] Ballard, A. J.; Das, R.; Martiniani, S.; Mehta, D.; Sagun, L.; Stevenson, J. D.; Wales, D. J. Theor. Chem. Acc. 2021, 140, 148. DOI: 10.1007/s00214-021-02834-w

[6] Röder, K.; Wales, D. J. Phys. Chem. Chem. Phys. 2017, 19, 23144–23152. DOI: 10.1039/C7CP01108C

[7] Hu, Z.; Jagtap, A. D.; Karniadakis, G. E.; Kawaguchi, K. Comput. Methods Appl. Mech. Eng. 2023, 412, 116042. DOI: 10.1016/j.cma.2023.116042

[8] Still, S.; Sivak, D. A.; Bell, A. J.; Crooks, G. E. New J. Phys. 2017, 19, 089502. DOI: 10.1088/1367-2630/aa89ff

© 2025 ReaxionLab - All Rights Reserved.